We’re on the brink of an open-source hardware revolution, and if you’ve been paying attention, you can feel it. The recent hype around DeepSeek and OpenAI isn’t just about AI models; it’s a symptom of a much bigger shift. The ethos of open technology is creeping into every layer of engineering, and robotics is next.

Yann LeCun, a thought leader in the open-source AI space, has been preaching the importance of open-source systems for years, and he’s right: proprietary tech creates silos, while open-source tech fuels innovation. The Linux moment for software unlocked an era of exponential development, and now robotics is standing at that same precipice. But there’s a problem: while software has flourished in open ecosystems, hardware has remained shackled to closed designs, bloated costs, and restrictive access.

Why Hardware Needs Its “Linux Moment”

For decades, robotics has been stuck in a world where cutting-edge technology is locked behind closed doors. If you wanted precision robotics, you had to shell out a fortune for high-end industrial arms. If you wanted to experiment with teleoperation, you were forced to work within expensive, closed systems that stifled customization and tinkering. It’s an ecosystem that discourages progress for anyone outside well-funded research labs or massive corporations.

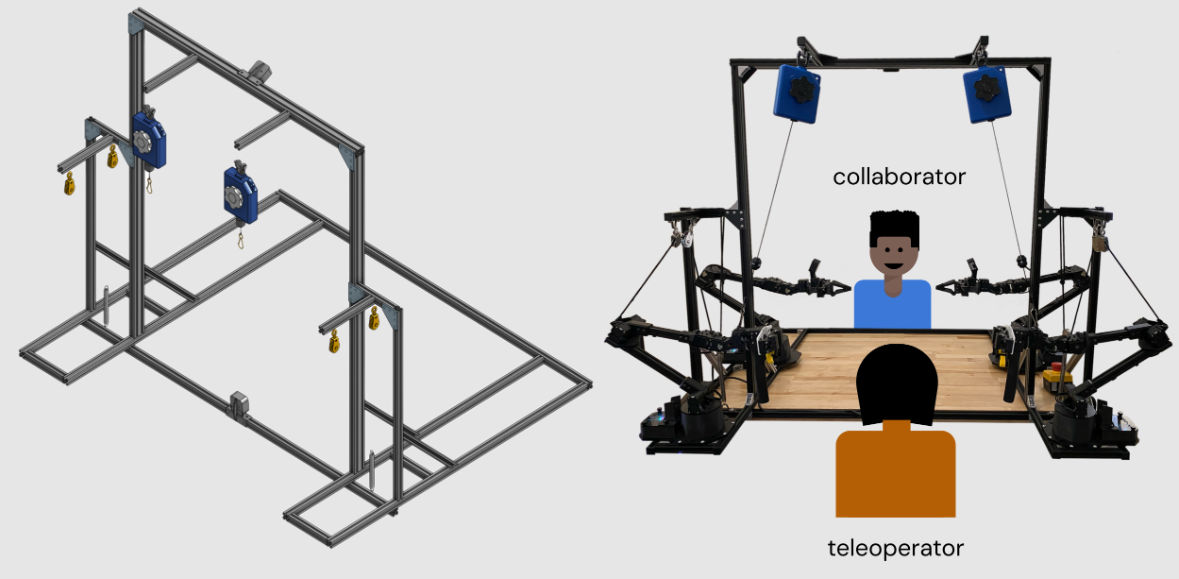

ALOHA 2 disrupts that paradigm. This isn’t just another research project—it’s a bold step toward democratizing robotics. With an open hardware design, modular components, and the ability to match (or even outperform) expensive proprietary systems, ALOHA 2 is creating a platform where innovation isn’t limited by paywalls. It’s giving independent developers, researchers, and tinkerers the tools to push robotics forward—just like Linux did for software.

This article is for those who get it—the builders, the hackers, the ones who grew up taking things apart just to see how they worked. We’ll dive deep into the problems with traditional teleoperation, the breakthroughs ALOHA 2 brings, and how open hardware is setting the foundation for the future of robotics. If you’ve ever dreamed of hacking together your own precision robotic system, this is where it starts.

The Cost Barrier in Teleoperation

For decades, the robotics industry has remained gated behind prohibitive costs. Industrial-grade teleoperation systems often carry price tags upwards of $100,000, driven by the need for high-precision actuators, proprietary control systems, and extensive calibration requirements.

When manufacturers talk about “industrial-grade” teleoperation, they’re really talking about a complex web of interdependent proprietary systems. A basic robotic arm with teleoperation capabilities starts at $50K-80K, but that’s just the beginning. Add in the mandatory force-feedback systems ($20K+), annual software licensing fees ($10K-15K), and specialized calibration equipment, and you’re looking at well over $100K before writing a single line of code. But the hidden costs run even deeper. Most systems require specialized training programs ($5K per operator), regular recalibration by factory technicians ($2K-3K per visit), and maintenance contracts that can run into tens of thousands annually. This isn’t just expensive—it’s a business model designed to keep users dependent on vendors for even basic modifications.

Even research setups built around mid-range robotic arms cost tens of thousands of dollars, which limits access to well-funded institutions and corporations. This financial barrier stifles innovation and makes robotics an exclusive domain.

The Limitations of Traditional Task-Space Mapping

The high costs might be justifiable if traditional systems delivered flawless performance. Instead, they’re built on a fundamentally flawed approach: task-space mapping. This method tries to directly translate human hand movements into robot end-effector positions, which seems intuitive but creates cascading problems in practice.

The first issue is latency

Most commercial systems operate at a mere 5-10Hz control frequency, introducing a 100-200ms delay between operator input and robot response. To understand why this matters, try this experiment: watch your hand movements in a video call with a 200ms delay. Even simple tasks become exercises in frustration. Now imagine trying to thread a needle or manipulate delicate objects with that same delay.
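The 100-200ms figure follows directly from the update rate: a loop running at f Hz issues a new command only every 1/f seconds, so the operator can wait up to one full period before the robot reacts. This quick check ignores sensing, network, and actuator delays, which only add to the total:

```python
def control_period_ms(frequency_hz: float) -> float:
    """Time between consecutive control updates, in milliseconds."""
    return 1000.0 / frequency_hz


for hz in (5, 10, 50):
    print(f"{hz} Hz -> {control_period_ms(hz):.0f} ms per update")
# prints:
# 5 Hz -> 200 ms per update
# 10 Hz -> 100 ms per update
# 50 Hz -> 20 ms per update
```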

The second problem is even more insidious: kinematic singularities

When a robot arm approaches certain configurations (like full extension), traditional inverse kinematics algorithms break down spectacularly. The system either freezes or makes unpredictable movements. Experienced operators learn to avoid these “danger zones,” but this means they’re constantly fighting the system instead of focusing on the actual task.
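To see why, consider a hypothetical two-link planar arm (a textbook toy, not ALOHA 2’s actual geometry). Its Jacobian determinant works out to l1 · l2 · sin(q2), which vanishes when the arm is fully stretched out (q2 = 0). A task-space controller that asks the hand to move radially near that pose must invert an almost-singular Jacobian, and the required joint speeds explode:

```python
import math


def jacobian_2link(q1, q2, l1=1.0, l2=1.0):
    """Jacobian of the end-effector (x, y) position of a planar 2-link arm."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [[-l1 * s1 - l2 * s12, -l2 * s12],
            [l1 * c1 + l2 * c12, l2 * c12]]


def joint_velocities(q1, q2, vx, vy, l1=1.0, l2=1.0):
    """Solve J(q) * qdot = v for qdot using the explicit 2x2 inverse."""
    (a, b), (c, d) = jacobian_2link(q1, q2, l1, l2)
    det = a * d - b * c  # equals l1 * l2 * sin(q2)
    if abs(det) < 1e-12:
        raise ValueError("singular configuration: Jacobian is not invertible")
    return (d * vx - b * vy) / det, (-c * vx + a * vy) / det


# Ask for a modest 0.1 m/s radial hand velocity as the elbow straightens out.
for q2 in (0.5, 0.1, 0.01):
    dq1, dq2 = joint_velocities(0.0, q2, vx=0.1, vy=0.0)
    print(f"q2={q2}: peak joint speed {max(abs(dq1), abs(dq2)):.2f} rad/s")
# prints:
# q2=0.5: peak joint speed 0.39 rad/s
# q2=0.1: peak joint speed 2.00 rad/s
# q2=0.01: peak joint speed 20.00 rad/s
```

Practical IK solvers soften this with damping or by steering away from such poses, which is the workaround operators end up internalizing as “danger zones.”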

Perhaps most damaging is the physical toll on operators

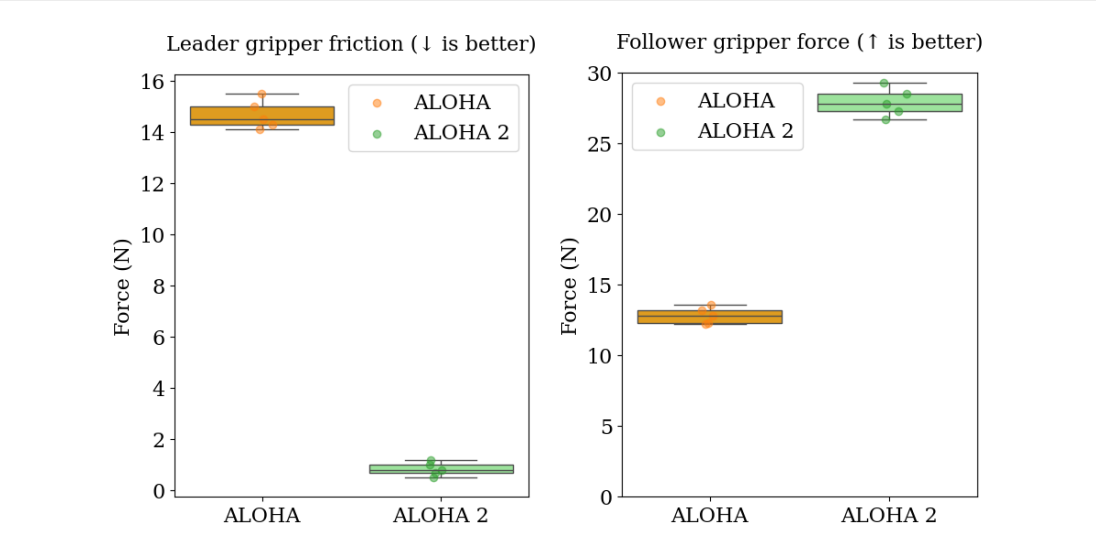

Traditional leader grippers require 14.68 newtons (N) of force to operate, equivalent to maintaining a pinch grip on a 1.5kg weight. Over an eight-hour shift, that effort causes severe operator fatigue and erodes precision exactly when it’s needed most.
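The 1.5kg comparison is just force divided by standard gravity. The same conversion puts the 0.84N leader-gripper figure quoted for ALOHA 2 at under 90 grams:

```python
STANDARD_GRAVITY = 9.80665  # m/s^2


def equivalent_mass_kg(force_newtons: float) -> float:
    """Mass whose weight equals the given force under standard gravity."""
    return force_newtons / STANDARD_GRAVITY


print(f"{equivalent_mass_kg(14.68):.2f} kg")  # -> 1.50 kg (traditional leader gripper)
print(f"{equivalent_mass_kg(0.84):.3f} kg")   # -> 0.086 kg (ALOHA 2 leader gripper)
```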

Dependence on Proprietary Systems

To compensate for these limitations, manufacturers rely on proprietary, high-cost solutions. Advanced robotic arms with built-in precision control mechanisms significantly improve performance but at an enormous price. Additionally, these systems are often locked behind closed software ecosystems, restricting customization and limiting accessibility to those who can afford expensive licensing fees.

Breaking Free: ALOHA 2’s Joint-Space Revolution

The fundamental problem with teleoperation isn’t only cost; it’s also control. Traditional systems try to map human movements directly to robot end-effectors, an approach that seems intuitive but misreads how humans actually coordinate complex movements. ALOHA 2 takes a radically different approach by focusing on joint-space mapping, and the results are transformative. If traditional teleoperation is a house of cards built on outdated control methods and vendor lock-in, ALOHA 2 is the wrecking ball.

Understanding Joint-Space Mapping

Think about how you reach for a cup. You’re not consciously calculating the exact position of your hand in 3D space—your brain naturally coordinates the movement of your shoulder, elbow, and wrist joints. This is exactly how ALOHA 2 works. It maps the leader robot’s joint angles to the follower robot’s joints in real time. This unlocks smoother, more predictable control, eliminates erratic motion scaling, and enables higher precision with less effort from the operator.
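A minimal sketch of what joint-space mapping means in code, assuming the leader and follower share the same joint layout. The function names, limits, and calibration offsets below are hypothetical, not ALOHA 2’s actual software. Each follower joint simply tracks its leader counterpart, so no inverse kinematics is solved at all; only the gripper needs rescaling, because the leader and follower grippers have different travel:

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class JointLimits:
    lower: float  # radians
    upper: float  # radians


def map_leader_to_follower(
    leader_q: Sequence[float],
    limits: Sequence[JointLimits],
    offsets: Optional[Sequence[float]] = None,
) -> List[float]:
    """Copy leader joint angles one-to-one onto the follower.

    `offsets` are optional per-joint calibration corrections (hypothetical).
    Commands are clamped so the follower never leaves its safe range.
    """
    if offsets is None:
        offsets = [0.0] * len(leader_q)
    return [min(max(q + off, lim.lower), lim.upper)
            for q, off, lim in zip(leader_q, offsets, limits)]


def map_gripper(leader_pos: float, leader_closed: float, leader_open: float,
                follower_closed: float, follower_open: float) -> float:
    """Linearly rescale the leader gripper opening onto the follower's range."""
    t = (leader_pos - leader_closed) / (leader_open - leader_closed)
    t = min(max(t, 0.0), 1.0)  # normalized opening in [0, 1]
    return follower_closed + t * (follower_open - follower_closed)
```

Because there is no IK step, there is nothing to blow up near full extension: the follower goes wherever the operator’s leader arm already is.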

This seemingly simple shift in approach cascades into dramatic improvements across every aspect of teleoperation.

Control Frequency: From Lag to Flow

Most commercial teleoperation systems run at a sluggish 5-10Hz control frequency, introducing a 100-200ms delay between input and execution. ALOHA 2 operates at a crisp 50Hz. This isn’t just a numbers game—it’s the difference between fighting the system and feeling like a natural extension of your movements. At 50Hz, the control loop runs every 20 milliseconds, fast enough that operators report the robot feels like a direct extension of their arms rather than a remote tool they’re trying to control.
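The essence of a 50Hz teleoperation loop is “read leader, command follower, wait for the next 20ms tick.” The sketch below is a generic fixed-rate loop with hypothetical `read_leader` and `command_follower` callbacks; inside a ROS2 node you would more typically drive the same body from a timer created with rclpy’s `create_timer`:

```python
import time
from typing import Callable, Sequence


def run_teleop_loop(read_leader: Callable[[], Sequence[float]],
                    command_follower: Callable[[Sequence[float]], None],
                    rate_hz: float = 50.0,
                    n_steps: int = 100) -> None:
    """Run a fixed-rate leader -> follower loop (callbacks are hypothetical)."""
    period = 1.0 / rate_hz          # 0.02 s at 50 Hz
    next_tick = time.monotonic()
    for _ in range(n_steps):
        command_follower(read_leader())
        next_tick += period
        sleep_for = next_tick - time.monotonic()
        if sleep_for > 0:
            time.sleep(sleep_for)
        else:
            # Overran the 20 ms budget: resynchronize rather than firing
            # a burst of catch-up commands at the follower.
            next_tick = time.monotonic()
```

Scheduling against absolute tick times, rather than sleeping a fixed 20ms after each iteration, keeps the loop from drifting as the work inside it takes time.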

Drastic Force Reduction & Natural Leader-Follower Control

Traditional systems require a punishing 14.68N of force just to operate the grippers—imagine trying to maintain precise control while constantly squeezing a heavy spring. ALOHA 2 slashes this down to just 0.84N.

| Feature | ALOHA 2 | Traditional Systems |
|---|---|---|
| Control Frequency | 50Hz | 5 – 10Hz |
| Latency | < 20ms | 100 – 200ms |
| Leader Gripper Force | 0.84N | 14.68N |
| Follower Closing Force | 27.9N | 12.8N |
| Cost | Fraction of traditional systems | $100k+ |

Operators can work longer, with more precision, without suffering from hand fatigue. The leader-follower system ensures that every movement made by the operator is naturally and intuitively mirrored by the follower robot, allowing for an organic teleoperation experience with minimal cognitive load.

This is a fundamental shift in usability.

Sub-Millimeter Precision for Complex Tasks

Industrial teleoperation is often associated with crude, imprecise movements that require constant operator correction. ALOHA 2 pairs its improved usability with stronger grasping: its follower grippers deliver more than double the closing force of traditional systems (27.9N vs 12.8N), so they can hold objects firmly while the leader-follower mapping keeps motions precise. That combination enables manipulation tasks that previously required far more expensive hardware.

Control Architecture & Software Stack

ALOHA 2’s core control system is built on ROS2 (Robot Operating System 2), which provides modularity, low-latency communication, and straightforward integration with robot learning pipelines. Unlike traditional teleoperation setups that require low-level CAN programming, ALOHA 2 keeps software complexity down through Interbotix’s Python-based control libraries, saving developers hundreds of lines of low-level code.

Key Components of ALOHA 2’s Software Stack:

- ROS2 Middleware: Handles real-time communication between leader and follower arms.

- PID & Impedance Control: Ensures smooth and adaptive teleoperation.

- Preloaded Machine Learning Environment: Comes with Ubuntu, ROS2, and Interbotix for out-of-the-box deployment.

- Gravity Compensation Module: Reduces operator strain using passive retraction mechanisms.
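The PID item above is standard control theory rather than anything ALOHA-specific, and Dynamixel servos typically run their own internal position PID. As a generic illustration (the gains and toy plant are made up, not ALOHA 2’s tuning), here is a textbook discrete PID driving a simulated joint toward a setpoint:

```python
class PID:
    """Textbook discrete PID controller for a single joint."""

    def __init__(self, kp: float, ki: float, kd: float, dt: float):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None  # skip the derivative term on the first step

    def update(self, setpoint: float, measurement: float) -> float:
        error = setpoint - measurement
        self.integral += error * self.dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(pid: PID, setpoint: float, steps: int) -> float:
    """Toy joint whose velocity equals the controller output (a pure integrator)."""
    position = 0.0
    for _ in range(steps):
        position += pid.update(setpoint, position) * pid.dt
    return position


# 1500 steps at dt = 0.02 s is 30 simulated seconds at the article's 50 Hz rate.
final = simulate(PID(kp=2.0, ki=0.5, kd=0.0, dt=0.02), setpoint=1.0, steps=1500)
```

Impedance control uses the same spring-damper structure, torque = K(q_desired - q) + D(qdot_desired - qdot), but treats the gains as a chosen stiffness and damping, which is what lets a joint yield gently on contact instead of fighting it.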

The Engineering That Makes It Work

Performance is great, but ALOHA 2 also rethinks how robotic systems should be built. Where traditional manufacturers optimize for control and lock-in, ALOHA 2 optimizes for something more valuable: freedom to innovate.

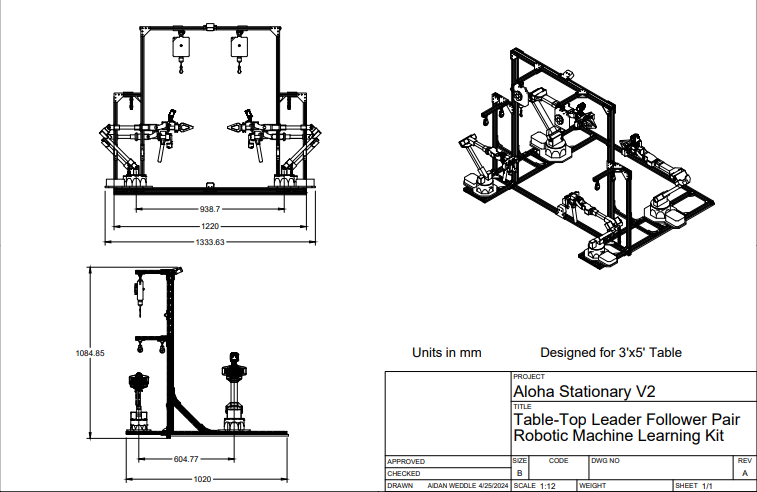

Modular Design Philosophy

Want to experiment with a new gripper design? You can 3D print it using the provided CAD files. Need to modify the control system? The software is open source and well documented. This reflects one of ALOHA 2’s defining traits: its modular architecture. Instead of being locked into a monolithic system where one faulty component can render the entire unit useless, ALOHA 2 embraces modularity at every level. From the grippers to the actuators, each subsystem is designed to be easily replaced, upgraded, or modified.

| Component | Specification |

|---|---|

| Leader Arms | WidowX 250 S – ALOHA Version |

| Follower Arms | ViperX 300 S – ALOHA Version |

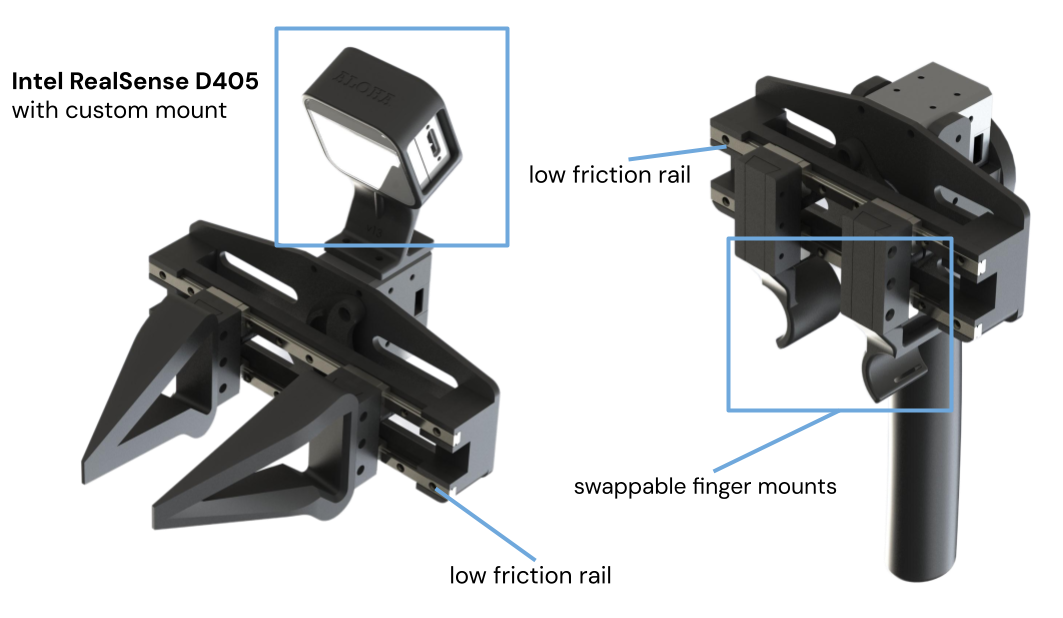

| Cameras | 4x Intel RealSense D405 |

| Chassis | Modular, Aluminum Extrusion |

| Computer | High-Performance Laptop (Preloaded w/ Ubuntu, ROS2, Interbotix) |

Strategic Use of Standard Components

Robotics manufacturers have sold us a story that precision requires proprietary parts and closed systems. ALOHA 2 flips this model by using off-the-shelf components wherever possible. Built around standard Dynamixel motors, it achieves sub-millimeter precision while keeping every part serviceable and replaceable. When a traditional industrial arm fails, you’re looking at weeks of downtime waiting for factory service. When an ALOHA 2 component needs attention, you can fix it with parts from any decent hardware supplier.

The Repairability Advantage

Unlike proprietary industrial robots that require specialized technicians for even minor repairs, ALOHA 2 is built to be repaired by its users. If a gripper breaks, a joint wears down, or a camera mount loosens, users don’t need to send it back to the manufacturer or wait for expensive replacement parts. Thanks to its open design and use of widely available materials, ALOHA 2 can be repaired with 3D-printed parts, generic fasteners, and components from a local machine shop. Compare waiting six weeks for a factory technician with fixing an ALOHA 2 gripper in a few hours. This repairability isn’t just about cost savings; it’s about longevity.

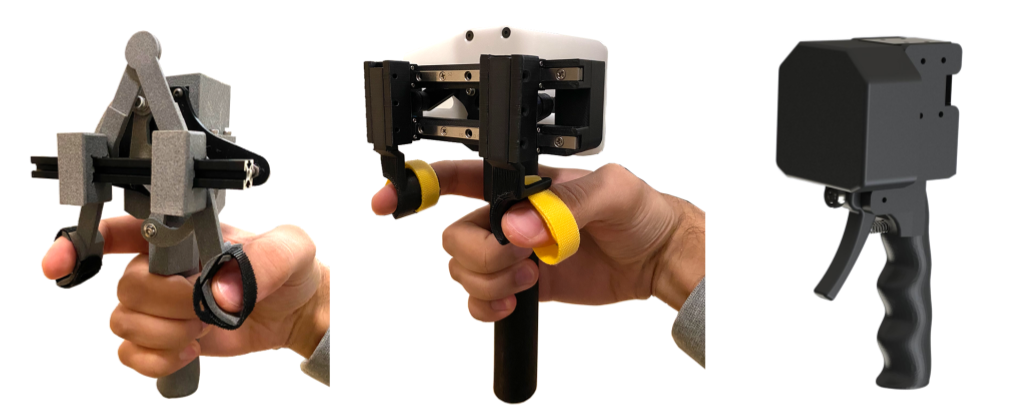

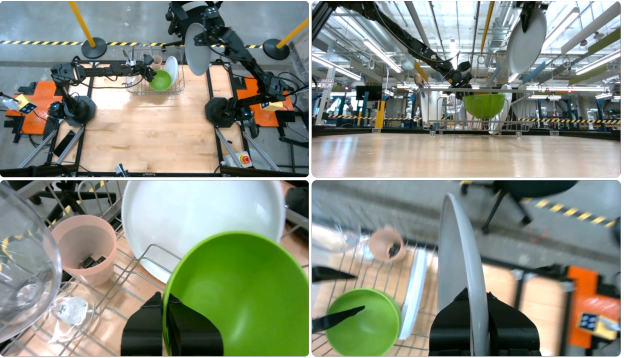

Smart Design Choices (Like See-Through Grippers)

ALOHA 2’s engineering is filled with small but critical optimizations that enhance usability. One standout example is the see-through grippers. Unlike traditional opaque grippers, ALOHA 2’s transparent finger design lets operators visually confirm the grip during delicate tasks. This improves human-robot interaction and makes fine manipulation far more intuitive, even though traditional robotics would dismiss it as mere aesthetics.

Other smart design improvements include:

- Low-friction rail-based grippers that drastically reduce operator fatigue.

- Passive gravity compensation that reduces strain during teleoperation.

- Swappable finger mounts for different task-specific adaptations.

Every choice in ALOHA 2’s design serves a purpose—to push performance and make robotics more accessible, repairable, and hackable.

Learning From Demonstration

Robots should learn the way humans do—not through endless lines of code, but by watching and imitating. How do you program a robot to adapt its grip strength for different objects? How do you code the subtle adjustments needed to thread a needle?

Under traditional programming, want a robot to pick up an object? You define precise grasp points, movement trajectories, and force parameters manually. Want it to adapt to different objects? That’s another round of parameter tuning, data labeling, and debugging. Now think about how humans learn complex physical tasks. A master craftsperson doesn’t teach their apprentice by writing down mathematical equations of force and motion; they demonstrate. The apprentice watches, mimics, and gradually develops an intuitive understanding of the task.

ALOHA 2 eliminates this bottleneck. Instead of writing code for every action, users can teach the system by physically demonstrating tasks. This approach drastically reduces the need for low-level programming, making robotic learning more intuitive and accessible to non-programmers, researchers, and engineers alike.

The Science Behind Learning from Demonstration

At its core, ALOHA 2’s learning system follows a powerful principle:

- The leader-follower teleoperation system records human demonstrations in real-time.

- The collected data is used to train control policies that can generalize beyond the recorded demonstrations.

- ALOHA 2 replays, refines, and improves its performance over repeated attempts.
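The “data into policy” step is, at its core, supervised learning: fit a function that maps what the robot observes to the action the human demonstrator took. Real systems train deep networks on camera images and joint states; the sketch below is a deliberately tiny linear stand-in (plain least-squares behavior cloning, not ALOHA 2’s actual learning method) that shows the shape of the problem:

```python
import numpy as np


def fit_linear_policy(observations: np.ndarray, actions: np.ndarray) -> np.ndarray:
    """Behavior cloning in its simplest form: least-squares regression from
    observations (N x d_obs) to demonstrated actions (N x d_act)."""
    X = np.hstack([observations, np.ones((observations.shape[0], 1))])  # bias column
    W, *_ = np.linalg.lstsq(X, actions, rcond=None)
    return W  # shape (d_obs + 1, d_act)


def act(W: np.ndarray, observation: np.ndarray) -> np.ndarray:
    """Query the cloned policy for a single observation."""
    return np.append(observation, 1.0) @ W
```

Swapping the linear map for a neural network and the least-squares solve for gradient descent gives the imitation-learning setups used in practice; the inputs and targets stay the same.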

Each demonstration generates thousands of data points showing not just what humans do, but how they do it. When an operator demonstrates threading a needle, the system records:

- Precise joint positions and velocities

- Force adjustments during delicate manipulations

- Visual feedback from multiple camera angles

- Temporal relationships between movements
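One way to picture a recorded demonstration is as a time-ordered list of snapshots. The field names below are hypothetical, chosen to mirror the bullets above rather than ALOHA 2’s actual file format; timestamps and ordering are what preserve the temporal relationships:

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Timestep:
    """One snapshot of a demonstration (hypothetical schema)."""
    t: float               # seconds since the episode started
    qpos: List[float]      # joint positions, including the gripper
    qvel: List[float]      # joint velocities
    effort: List[float]    # motor effort readings, a proxy for applied force
    images: Dict[str, str] # camera name -> frame reference
    action: List[float]    # leader command sent to the follower at this step


@dataclass
class Episode:
    task: str
    steps: List[Timestep] = field(default_factory=list)

    def duration(self) -> float:
        """Elapsed time between the first and last recorded snapshot."""
        return self.steps[-1].t - self.steps[0].t if self.steps else 0.0
```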

This data becomes training material for machine learning algorithms that extract patterns and strategies from human expertise. In essence, instead of hardcoding how to fold a T-shirt, tie a knot, or manipulate delicate objects, ALOHA 2 observes, learns, and refines its skills, making it far more versatile than traditional programming-based approaches.

Scaling Robot Learning with High-Quality Data

The key to any good machine learning model is data, and ALOHA 2 is designed for data collection at an unprecedented scale. Equipped with overhead and wrist-mounted Intel RealSense D405 cameras, the system captures multi-angle visual input alongside high-frequency motion data, giving learning algorithms a detailed record of every demonstration.

- 50Hz Motion Logging – Captures fine-grained joint position and velocity data

- RGB-D Vision Data – Provides depth-aware perception for complex manipulation tasks

- Diverse Demonstration Collection – Thousands of recorded demonstrations enable better generalization across different tasks
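At a fixed 50Hz logging rate, consecutive samples are 20ms apart, so if only joint positions were logged, velocities could be recovered by finite differences. The article doesn’t say how ALOHA 2 obtains its velocity data, so treat this as a generic sketch using central differences in the interior and one-sided differences at the ends:

```python
from typing import List, Sequence


def finite_difference_velocity(positions: Sequence[float], rate_hz: float = 50.0) -> List[float]:
    """Estimate joint velocity from uniformly sampled positions."""
    dt = 1.0 / rate_hz  # 0.02 s between samples at 50 Hz
    n = len(positions)
    if n < 2:
        return [0.0] * n
    velocities = []
    for i in range(n):
        if i == 0:
            velocities.append((positions[1] - positions[0]) / dt)         # forward difference
        elif i == n - 1:
            velocities.append((positions[-1] - positions[-2]) / dt)       # backward difference
        else:
            velocities.append((positions[i + 1] - positions[i - 1]) / (2 * dt))  # central
    return velocities
```

Differentiation amplifies sensor noise, so real pipelines usually filter the result or rely on velocities reported directly by the motors.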

This massive dataset serves as fuel for AI-driven robotics, enabling better imitation learning, reinforcement learning, and policy optimization.

Source: the paper “ALOHA 2: An Enhanced Low-Cost Hardware for Bimanual Teleoperation”

Scalable Robot Learning with MuJoCo

ALOHA 2’s integration with MuJoCo (Multi-Joint Dynamics with Contact) is the bridge between human demonstration and scalable robot learning. The physics engine provides a highly accurate, low-cost environment for testing and refining robotic behaviors before deployment in the real world.

MuJoCo enables researchers to:

- Test control policies much faster than real time, accelerating development cycles

- Explore edge cases that would be too dangerous for real hardware, reducing costly failures

- Generate synthetic training data by varying parameters of successful demonstrations, expanding dataset diversity

- Validate learned behaviors across different environmental conditions, improving real-world adaptability
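Generating synthetic data by varying a successful demonstration is usually called domain randomization: perturb the scene parameters, re-simulate, and keep the variants that still succeed. The parameter names and ranges below are hypothetical; in MuJoCo you would write the sampled values into the model (for example its `geom_friction` array) before re-running the episode:

```python
import random
from dataclasses import dataclass, replace
from typing import List, Tuple


@dataclass(frozen=True)
class SceneParams:
    """Hypothetical parameters describing one demonstration's scene."""
    object_x: float  # object position on the table (m)
    object_y: float  # (m)
    friction: float  # sliding friction coefficient
    mass: float      # object mass (kg)


def randomize_scene(base: SceneParams, n: int, rng: random.Random,
                    pos_jitter: float = 0.02,
                    friction_scale: Tuple[float, float] = (0.7, 1.3),
                    mass_scale: Tuple[float, float] = (0.8, 1.2)) -> List[SceneParams]:
    """Domain randomization: n perturbed copies of a successful demo's scene."""
    return [
        replace(
            base,
            object_x=base.object_x + rng.uniform(-pos_jitter, pos_jitter),
            object_y=base.object_y + rng.uniform(-pos_jitter, pos_jitter),
            friction=base.friction * rng.uniform(*friction_scale),
            mass=base.mass * rng.uniform(*mass_scale),
        )
        for _ in range(n)
    ]
```

Passing in a seeded `random.Random` keeps each batch of variants reproducible, which matters when you later want to trace a policy failure back to the scene that caused it.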

Most importantly, ALOHA 2’s precise system identification makes sim-to-real transfer far smoother. Policies refined in MuJoCo carry over to the physical robot much more reliably, narrowing the “sim-to-real gap” that has plagued robotics for decades. Behaviors developed in simulation are far more likely to hold up in real-world execution, without endless trial-and-error tuning.
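System identification means fitting a simulator’s physical parameters to measurements from the real robot. The article doesn’t describe ALOHA 2’s procedure, so here is only a generic, single-joint illustration: assume torque = J · qdd + b · qd (inertia J, viscous damping b), log torque, velocity, and acceleration, and solve for J and b by least squares. Real joint models also include friction, gravity, and motor dynamics:

```python
import numpy as np


def identify_inertia_damping(tau: np.ndarray, qd: np.ndarray, qdd: np.ndarray):
    """Least-squares fit of tau = J * qdd + b * qd for one joint (toy model)."""
    A = np.column_stack([qdd, qd])  # regressor matrix, one row per logged sample
    (J, b), *_ = np.linalg.lstsq(A, tau, rcond=None)
    return float(J), float(b)
```

The identified values would then be written into the simulation model so that simulated joints respond to commands the way the real ones do.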

With real-world demonstrations, large-scale data collection, and high-fidelity simulation, ALOHA 2 is shaping the future of adaptive, scalable, and human-intuitive robotic learning.

The Future of Robotics is Open

For decades, robotics has been locked behind exorbitant costs, vendor-controlled ecosystems, and rigid architectures that stifle innovation. ALOHA 2 is proof that we don’t have to accept this status quo. It’s not just a more affordable robotic system—it’s a fundamentally different vision for how robotics should be built, shared, and evolved.

Traditional industrial robots are black boxes, engineered to be exclusive, expensive, and inflexible. ALOHA 2 flips that model on its head. Everything is open, from the CAD files to the control algorithms, giving developers, researchers, and engineers the freedom to tinker, iterate, and build on top of existing work. No more waiting for manufacturers to release updates. No more being locked into proprietary hardware. If you want to modify something, you can. If you want to improve something, you will.

This is the Linux moment for robotics—the point where an open-source platform becomes powerful enough to rival proprietary systems while remaining accessible enough to democratize an entire industry. ALOHA 2 delivers sub-millimeter precision, effortless teleoperation, and large-scale robot learning—all at a fraction of the cost of traditional systems. It outperforms its competitors not because it’s locked behind corporate secrecy, but because it’s open to collaboration from the brightest minds in robotics. This is what real innovation looks like—no artificial barriers, no paywalls, just a growing ecosystem of builders pushing robotics forward. DeepMind, Stanford, and robotics labs worldwide are already proving what’s possible when open-source takes center stage. Now it’s your turn.

If you’re a developer, a researcher, or someone who believes in breaking barriers rather than working around them, this is your moment. ALOHA 2 is an invitation. Check out the code on GitHub. Explore the MuJoCo models. Get the hardware. Experiment with it. Push it further. Be part of the future where robotics belongs to everyone.